Wave equation

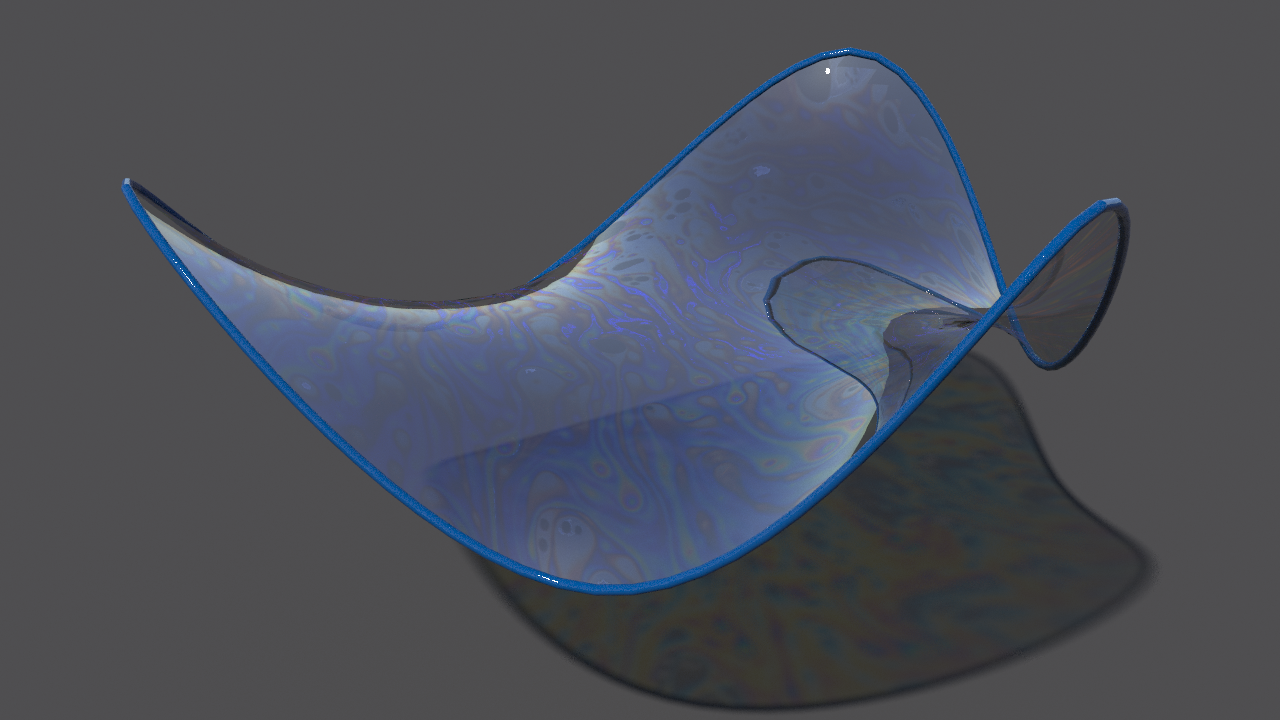

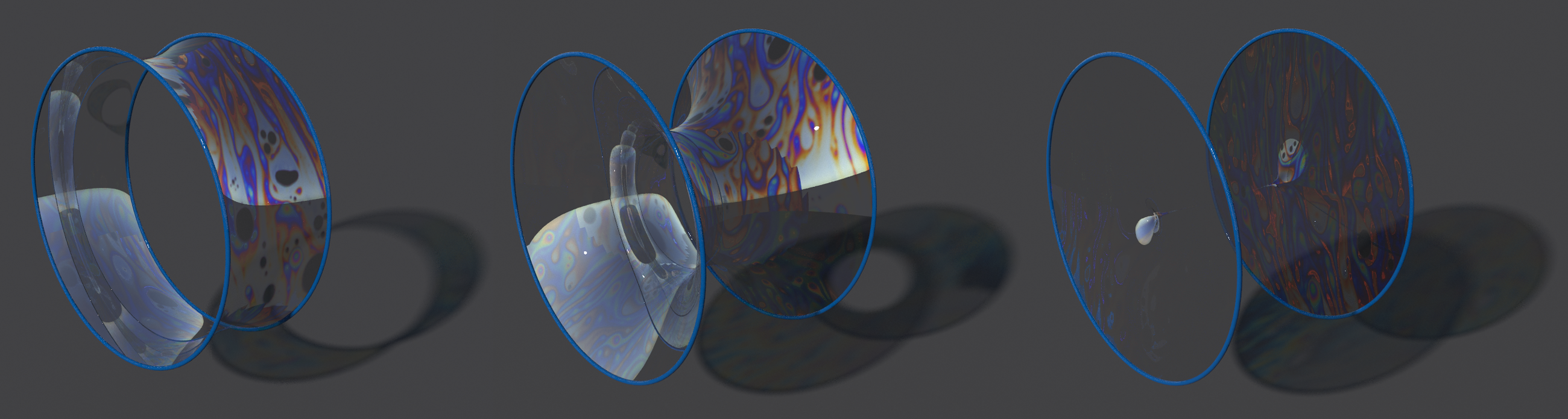

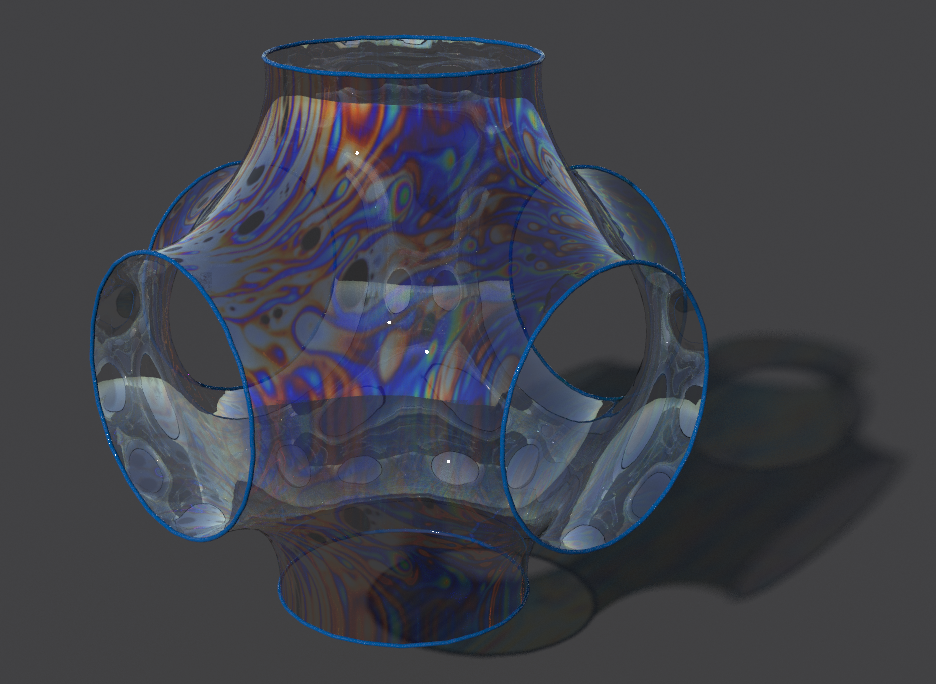

Let $M$ be a surface and $f : \mathbb{R} \times M \rightarrow \mathbb{R}$ a function. The wave equation is given by:

\[\ddot{f} = \Delta f.\]

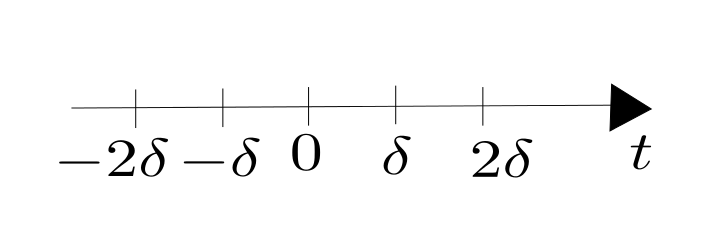

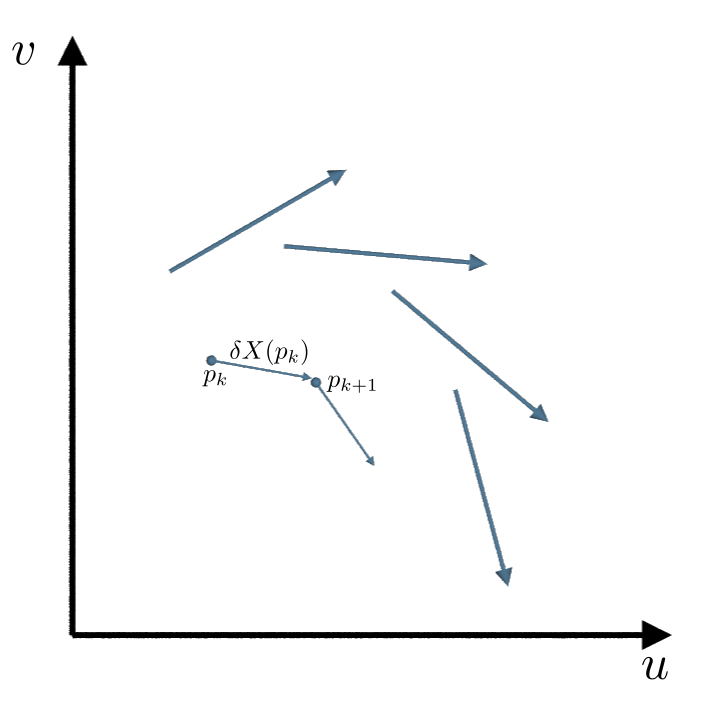

After the space discretization we have $f:\mathbb{R} \times V \rightarrow \mathbb{R},$ $p:= \left(\begin{matrix} f_1 \\ \vdots \\ f_n \end{matrix} \right) \in \mathbb{R}^n$ and $\Delta \in \mathbb{R}^{n \times n}$, where $n:=|V|$ denotes the number of vertices. Therefore, the wave equation becomes a linear system of second order ODE’s. In the last lecture we saw that the Verlet method works well for second order ODE’s. Using this for the Wave equation we get:\[\frac{p_{k-1}-2 p_k + p_{k+1}}{\delta^2} = \Delta p_k,\]

where $\delta$ is the time step.

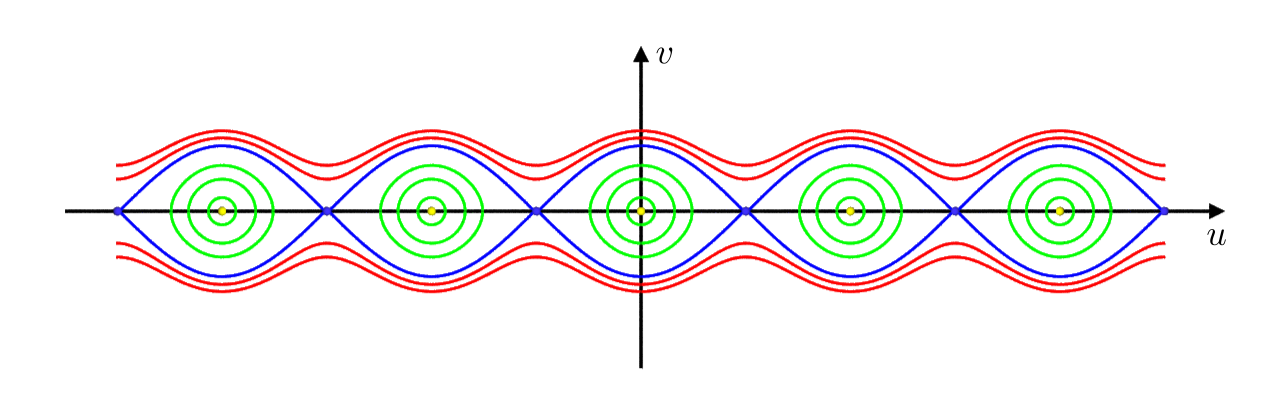

For initial data $p_0,p_1$, the hope is that the energy of the system neither grows exponentially nor that the system comes to a halt. A classical example for the $1-$dimensional wave equation is an oscillating string. This leads in analogy to music instruments to two kinds of initial data:

- Cembalo initial data: \[p_0 = p_1,\] here the string gets plucked, i.e. at time $t=0$ the oscillation is $p_o$ and the speed is zero.

- Piano initial data: \[p_0 =0,\] here the string gets struck i.e. at time $t=0$ the oscillation is zero but the speed is not.

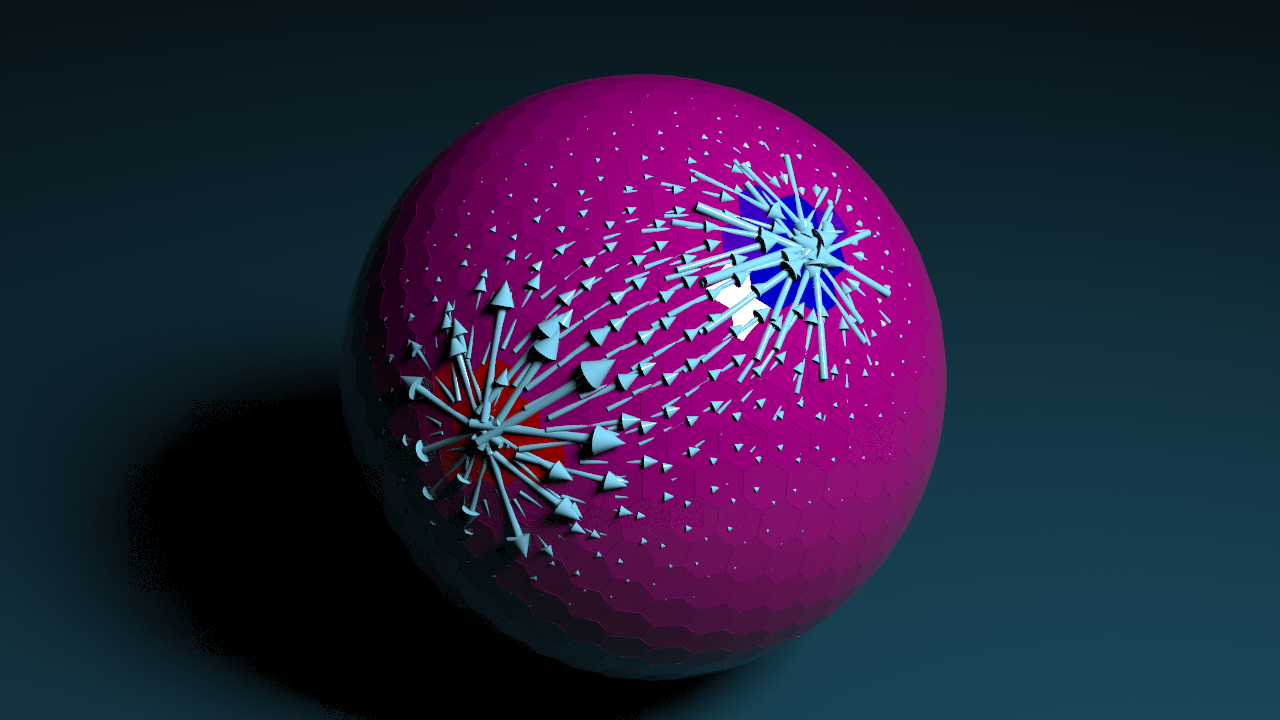

A different way to find solutions of the wave equations is to calculate the eigenfunctions of the laplace operator, $g:M \rightarrow \mathbb{R}$:

\[\Delta g= -\omega ^2 g.\]

Since the laplace operator is symmetric and negative definite, $\Delta$ is diagonalizable and there exist eigenvalues $\lambda_i := – \omega ^2_i$ with corresponding eigenfunctions $g_i$ such that such that:

\[0 = w_o^2 < w_1^2< w_2^2 \ldots.\]

Note that $0$ zero is an eigenvalue, because the kernel of $\Delta$ consists of the constant functions. If we computed the eigenfunctions, solutions of the wave equation can be constructed in the following way:

\[f(t,p) := \sum_{i =1}^n \left( a_i \cos(\omega_i t) + b_i \sin(\omega_i t)\right) g_i(p) , \quad \quad \quad a_i,b_i \in \mathbb{R}.\]

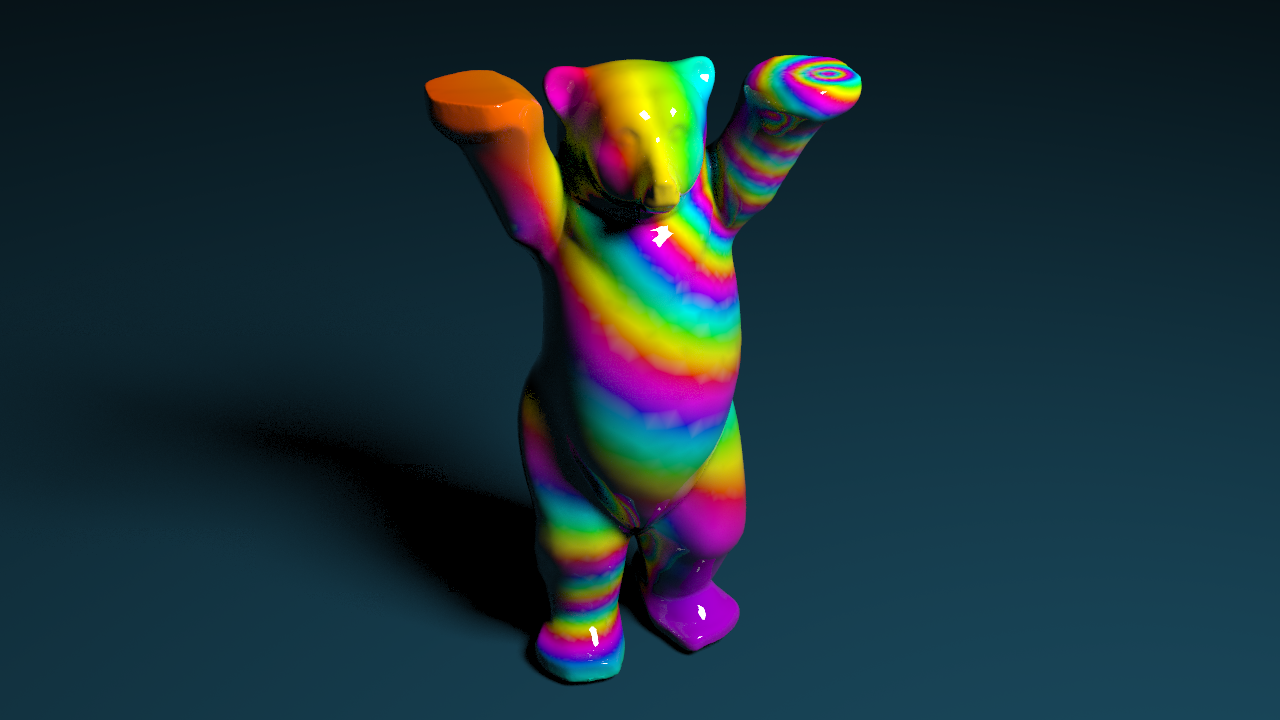

The coefficients $a_i$, $b_i$ have to be chosen in a way such that the initial conditions are satisfied. Note that for different surfaces the spectrum of eigenvalues is different. A sphere “sounds” different then the bunny. The disadvantage of this method is, that finding the eigenfunctions of the surface and computing the coefficients for the initial conditions can be very expensive, especially if the surface has many vertices.

Heat equation

For $f : \mathbb{R} \times M \rightarrow \mathbb{R}$, the heat equation is given by:

\[\dot{f} = \Delta f.\]

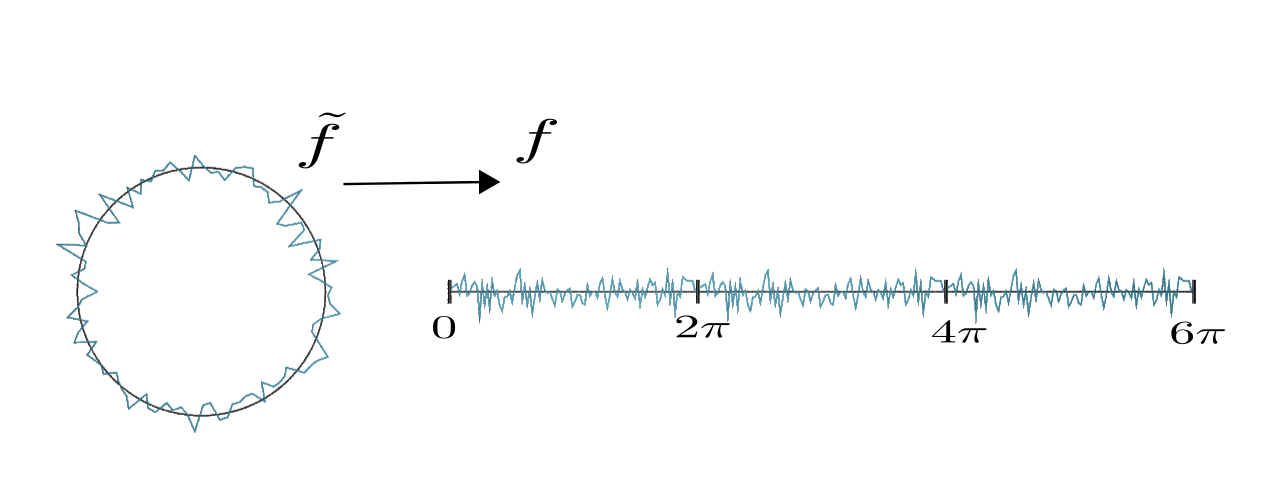

We will start our investigation by considering the case $M = \mathbb{S}^1.$ There we can describe a function $\tilde{f} : \mathbb{S}^1 \rightarrow \mathbb{R}$ by an periodic function $f : \mathbb{R} \rightarrow \mathbb{R},$ with $f(x + 2\pi) = f(x).$

Our aim is it to use the Euler forward method to solve the heat equation on $\mathbb{S}^1$, therefore we discretize the space, i.e. the interval $[0,2\pi]$, as follows:

\[x_k = \frac{2\pi k}{n}, \quad \quad x_{k+1} = x_k + h, \quad \quad \mbox{with }\quad h = \frac{2\pi}{n}.\]

In an earlier lecture we discussed discrete periodic functions and defined a canonical basis for them, such that we can write the components of $p_j = \left(\begin{matrix} u_1 \\ \vdots \\u_{2n-1} \end{matrix} \right)$ as

\[u_k = \frac{1}{\sqrt{2 \pi}}\sum_{m = -n}^n a_m e^{\frac{i 2\pi km}{n}} \quad \quad a_m \in \mathbb{C}.\]

Since we are considering real valued functions there has to hold $a_m = \bar{a}_{-m}$ for all $m \in \left\{-n, \ldots,0,\ldots, n\right\}$.

If we now use the Euler forward method we get:

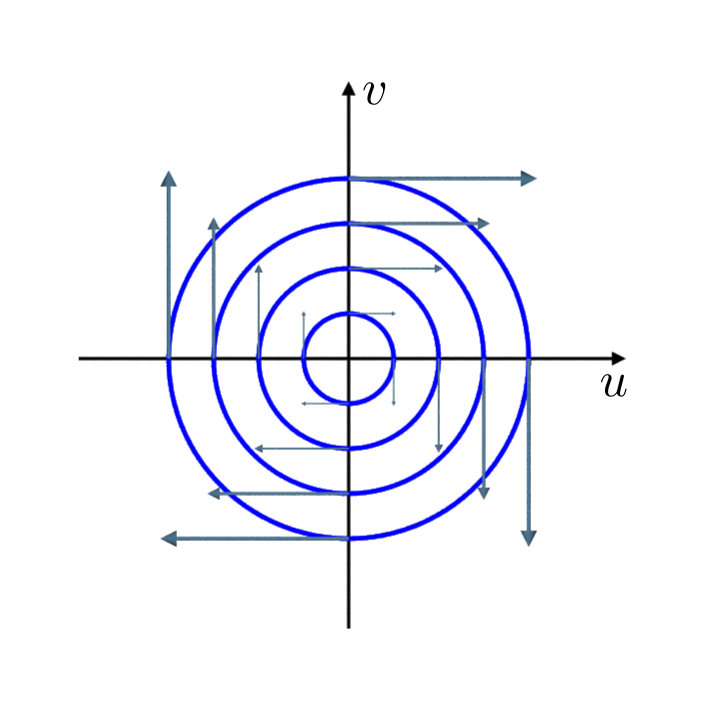

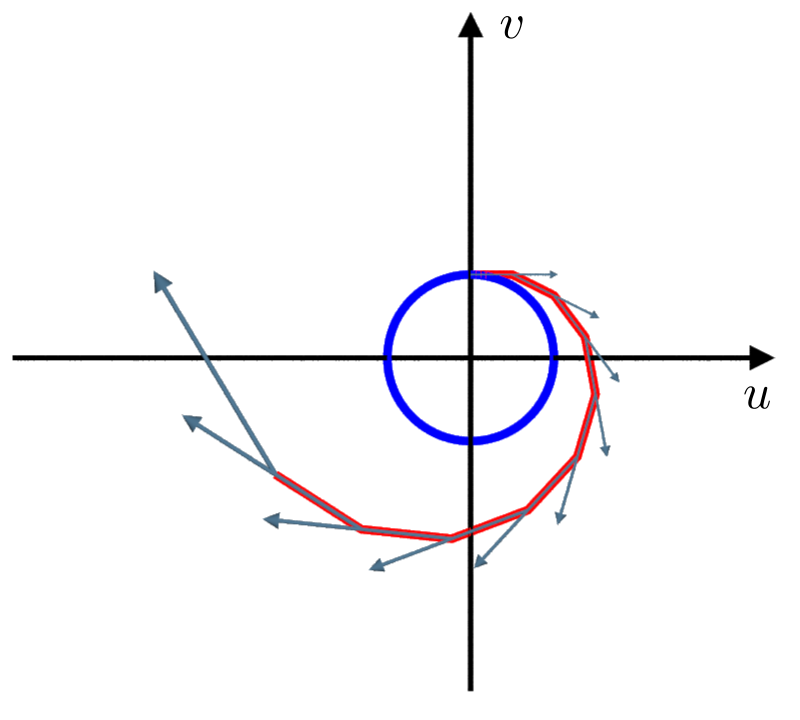

\[p_{j+1} = p_j + \delta \Delta p_k,\]

where $\delta$ denotes the time step size. The discrete laplacian on $\mathbb{S}^1$ can be considered as an approximation of the second spatial derivative and is defined as:

\[\left( \Delta u\right)_k = \frac{u_{k-1} -2 u_k + u_{k+1}}{h^2}.\]

By linearity it is enough to consider the case $u_k = e^{\frac{i 2\pi km}{n}}$:

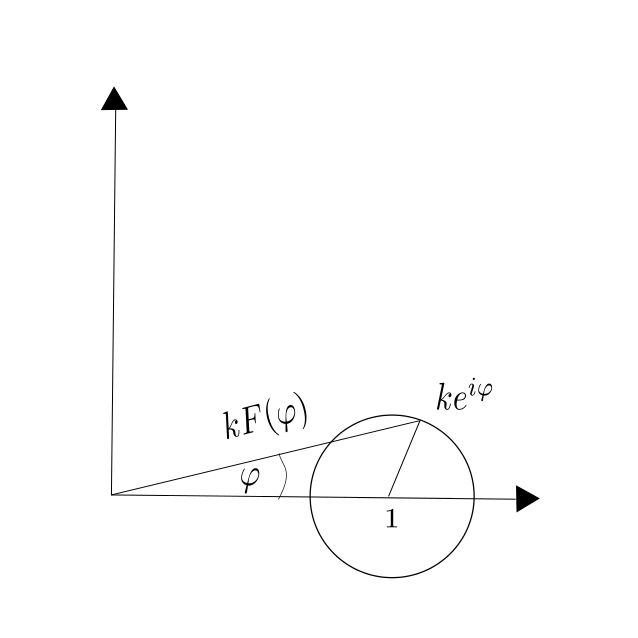

\begin{align} \tilde{u}_k & = u_k +\left( \Delta u\right)_k \\ & = e^{\frac{i 2\pi km}{n}} + \delta \frac{ e^{\frac{i 2\pi (k-1) m}{n}}-2 e^{\frac{i 2\pi km}{n}} + e^{\frac{i 2\pi (k+1)m}{n}}}{h^2} \\ & = \left(1 + \delta \frac{e^{\frac{i 2\pi m}{n}} + e^{\frac{-i 2\pi m}{n}} + 2}{h^2} \right) e^{\frac{i 2\pi km}{n}} \\ & = \left( 1 + \frac{2\delta}{h^2}\left( \cos \left(\frac{2 \pi m}{n}\right) -1\right)\right) u_k. \end{align}

The system will blow up after a certain time if there is an $m$ such that:

\begin{align} & \left| 1 + \frac{2\delta}{h^2}\left( \cos \left(\frac{2 \pi m}{n}\right) -1\right)\right| > 1 \\ \Leftrightarrow \quad &\frac{2\delta}{h^2}\left( \cos \left(\frac{2 \pi m}{n}\right) -1\right) < -2.\end{align}

The left hand side reaches its minimum if $m = \frac{n}{2}$ i.e $\cos \left(\frac{2 \pi m}{n}\right) = -1$ and we get:

\begin{align} &\frac{2\delta}{h^2}> 1 \\ \Leftrightarrow \quad & \delta > \frac{h^2}{2}.\end{align}

This gives us a connection between the space and time discretization that is not wanted. If we use the Euler forward method to solve the heat equation on $\mathbb{S}^1$ and we refine the time step by the factor of $10$, we have to refine the space discretization by the factor of $50$, to avoid the solution to blow up. Therefore it makes more sense to use the Euler backward method for this problem.

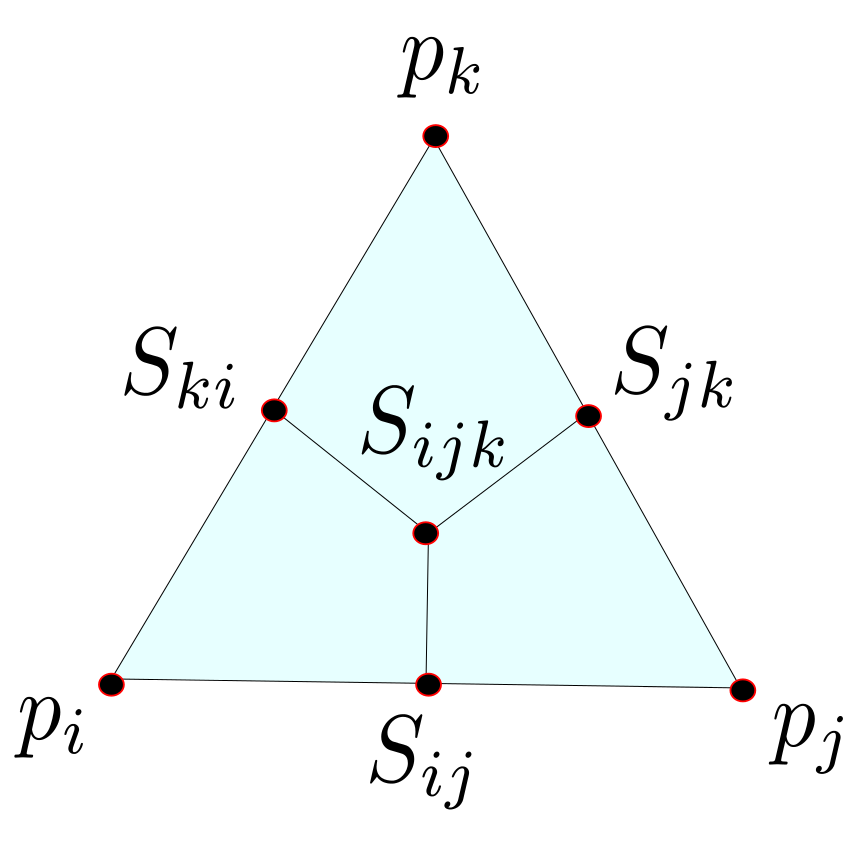

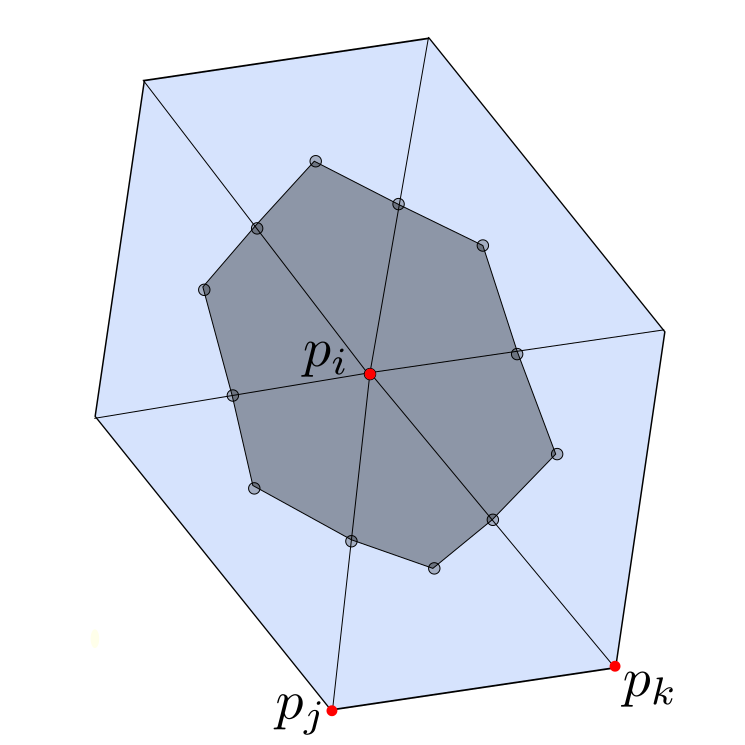

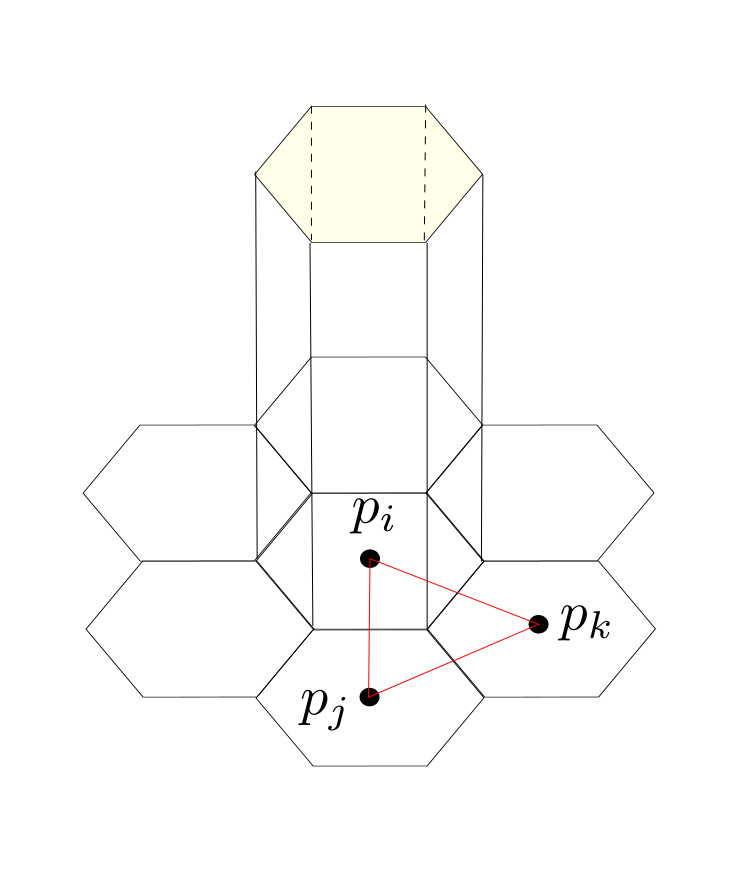

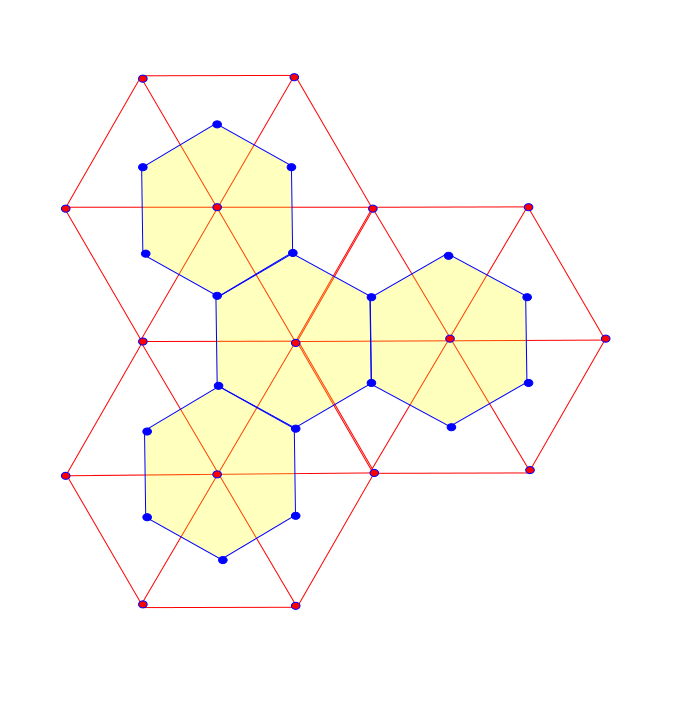

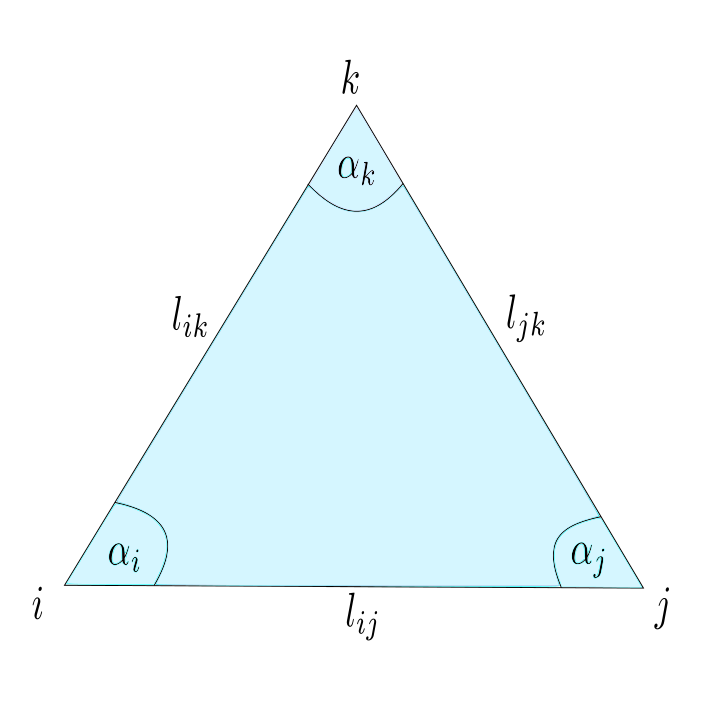

Note that every realization $p:V \rightarrow \mathbb{R}^3$ induces a metric on the underlying triangulated surface. On the other hand a triangulated surface with metric has several realization in $\mathbb{R}^3$, such that the metrics coincide. On a triangulated surface with metric every triangle has the structure of an euclidean triangle in $\mathbb{R}^2$, that allows us to interpolate $f:V\rightarrow \mathbb{R}$ to a piecewise affine function $\tilde{f}: C\left( \Sigma\right) \rightarrow \mathbb{R}$ and define the Dirichlet energy $E_D(f)$ as in the last

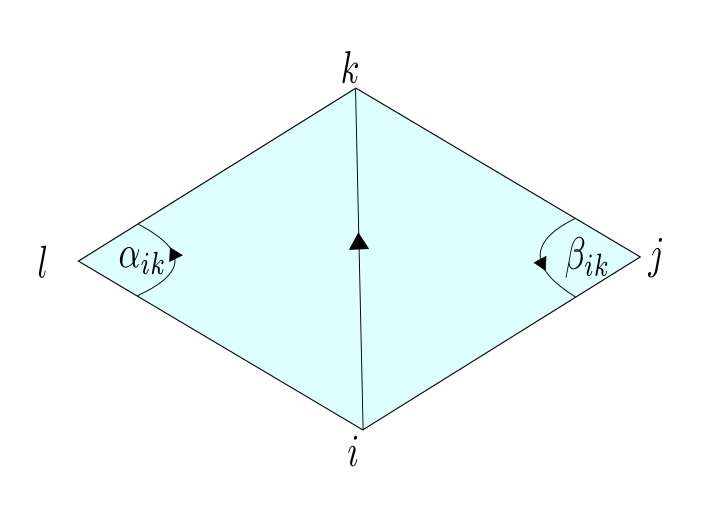

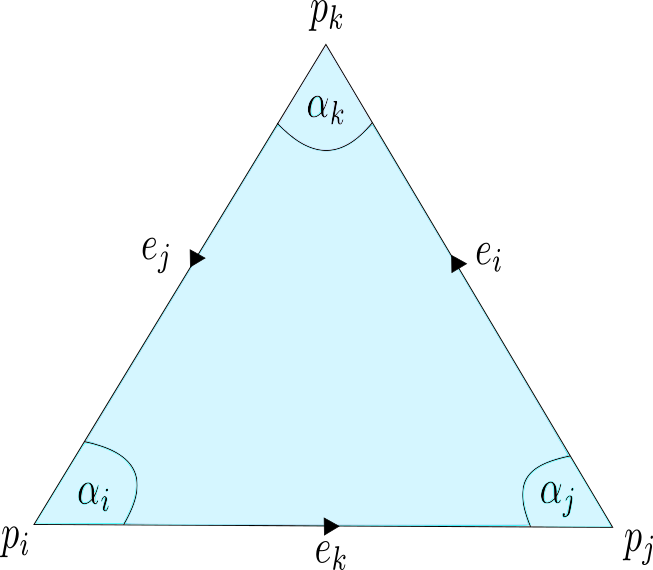

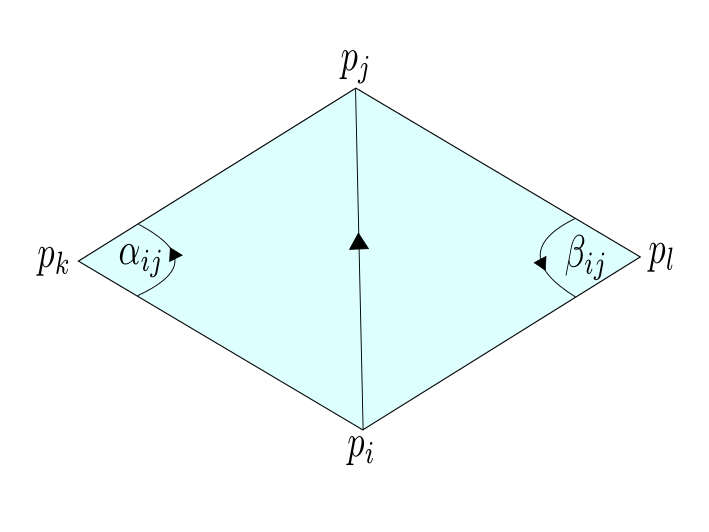

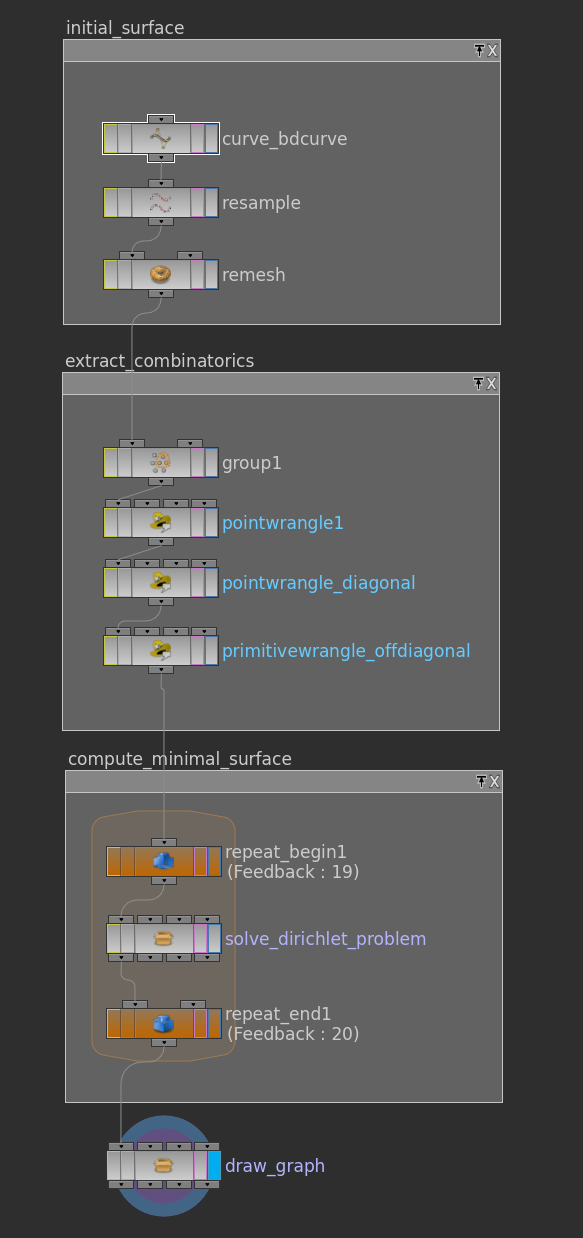

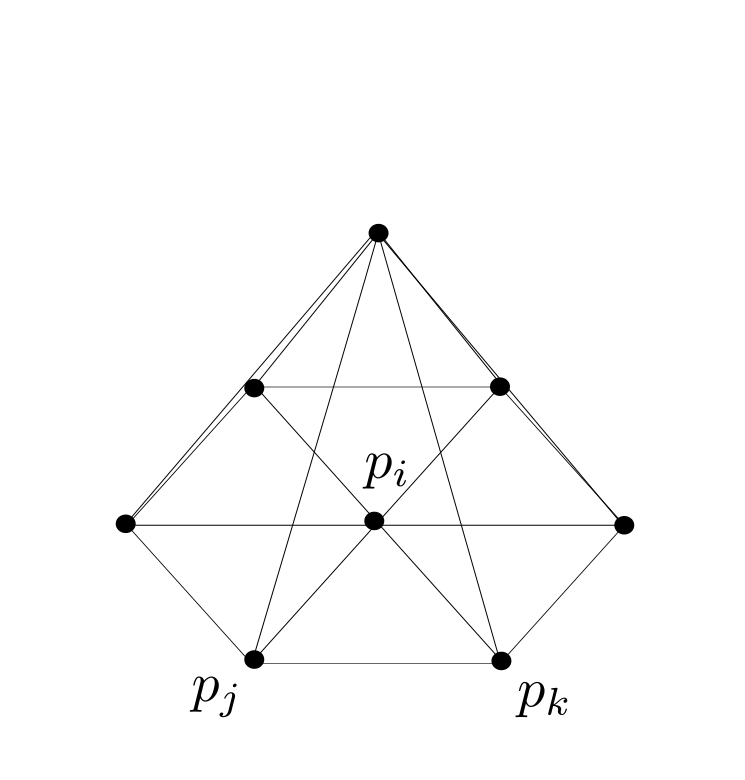

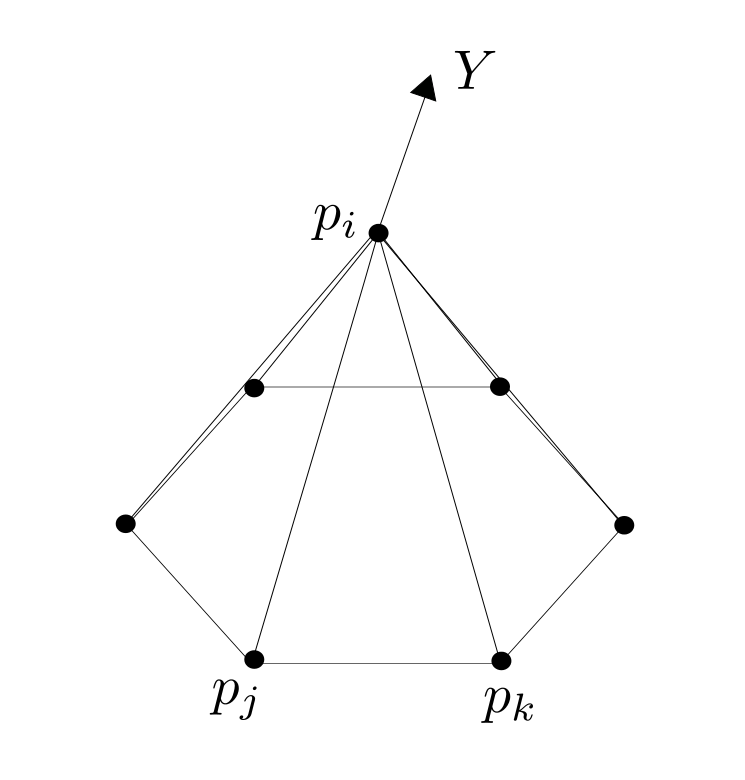

Note that every realization $p:V \rightarrow \mathbb{R}^3$ induces a metric on the underlying triangulated surface. On the other hand a triangulated surface with metric has several realization in $\mathbb{R}^3$, such that the metrics coincide. On a triangulated surface with metric every triangle has the structure of an euclidean triangle in $\mathbb{R}^2$, that allows us to interpolate $f:V\rightarrow \mathbb{R}$ to a piecewise affine function $\tilde{f}: C\left( \Sigma\right) \rightarrow \mathbb{R}$ and define the Dirichlet energy $E_D(f)$ as in the last  Fix $i \in \overset{\circ}{V}$ and let $ t \mapsto p_i(t), $ $ t \in (- \epsilon ,\epsilon) $ be a variation, that only moves $p_i$ and $Y:= p_i'(o). $ Further we define $\hat{F} := \left\{ \{i,j,k\} \in F \big \vert \mbox{ positive oriented} \right\}$ to be the set of positive oriented triangles that contain the vertex $i$. We have to show that there holds:

Fix $i \in \overset{\circ}{V}$ and let $ t \mapsto p_i(t), $ $ t \in (- \epsilon ,\epsilon) $ be a variation, that only moves $p_i$ and $Y:= p_i'(o). $ Further we define $\hat{F} := \left\{ \{i,j,k\} \in F \big \vert \mbox{ positive oriented} \right\}$ to be the set of positive oriented triangles that contain the vertex $i$. We have to show that there holds: